GemmParser Class Reference

Parses an ONNX Gemm operator into a LayerNode.

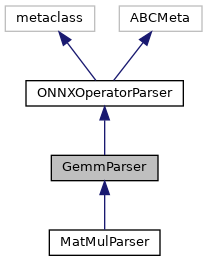

Inheritance diagram for GemmParser:

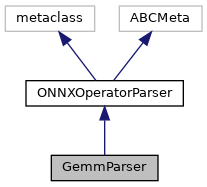

Collaboration diagram for GemmParser:

Public Member Functions

| Return type | Member function | Description |
|---|---|---|
| LayerNode | `run(self)` | Run the parser. |
| dict[str, Any] | `get_layer_node_user_format(self, list[int] input_shape, list[int] output_shape)` | Generate layer data in user input format for MatMul or GEMM ONNX nodes. |
| def | `generate_layer_node(self)` | |
| list[int] | `infer_input_activation_shape(self, list[int] output_shape)` | If the input activations are empty (which can happen if there is a Shape operator in the path), extract the weights from the model graph initializer to get the correct input activation size. |

Public Member Functions inherited from ONNXOperatorParser

| Return type | Member function | Description |
|---|---|---|
| None | `__init__(self, int node_id, NodeProto node, dict[int, Any] nodes_outputs, ModelProto onnx_model, *, list[dict[str, Any]] \| None mapping_data=None, Accelerator \| None accelerator=None)` | |
| def | `get_input_output_weight_data_type(self)` | Return the data type of the input, output and weight tensors of this node. |
| def | `get_weight_name(self, NodeProto node)` | Return the name of the weight input of this node depending on its operator type. |
| list[int] | `get_node_predecessors(self)` | Compute node input sources. |
| def | `get_operand_source_user_format(self, list[int] predecessors)` | Set input source and indicate constant operands. |
| def | `get_weight_precision(self)` | Return the weight precision for this node. |
| def | `get_activation_precision(self)` | Return the activation precision for this node. |
| def | `get_intermediate_output_precision(self)` | Return the intermediate output precision for this node. |

Additional Inherited Members

Public Attributes inherited from ONNXOperatorParser

- `node_id`
- `node`
- `nodes_outputs`
- `onnx_model`
- `mapping_data`
- `accelerator`

Static Public Attributes inherited from ONNXOperatorParser

| Type | Attribute |
|---|---|
| string | `CUSTOM_WEIGHT_SIZE_ATTR = "weight_size"` |
| string | `CUSTOM_ACT_SIZE_ATTR = "act_size"` |
| string | `CUSTOM_OUTPUT_SIZE_ATTR = "output_size"` |

Detailed Description

Parses an ONNX Gemm operator into a LayerNode.
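As background, the ONNX Gemm operator computes Y = alpha * A' * B' + beta * C, where A' and B' are A and B transposed when the `transA` and `transB` attributes are set. In the notation used by `get_layer_node_user_format` below, Gemm's M, inner and N dimensions correspond to D, C and K. The pure-Python sketch that follows only illustrates the operator semantics that this parser maps onto a LayerNode; it is not ZigZag code, the names `gemm` and `transpose` are hypothetical, and the unidirectional broadcasting ONNX allows for C is deliberately left out.

```python
from typing import Optional

Matrix = list[list[float]]


def transpose(m: Matrix) -> Matrix:
    """Return the transpose of a row-major matrix."""
    return [list(row) for row in zip(*m)]


def gemm(a: Matrix, b: Matrix, c: Optional[Matrix] = None, *,
         alpha: float = 1.0, beta: float = 1.0,
         trans_a: bool = False, trans_b: bool = False) -> Matrix:
    """Compute Y = alpha * A' @ B' + beta * C as in the ONNX Gemm definition.

    Simplification: C must already have the full (M, N) output shape;
    ONNX's unidirectional broadcasting of C is not handled here.
    """
    a_eff = transpose(a) if trans_a else a  # A' has shape (M, K)
    b_eff = transpose(b) if trans_b else b  # B' has shape (K, N)
    m, k = len(a_eff), len(a_eff[0])
    if len(b_eff) != k:
        raise ValueError("inner dimensions of A' and B' must match")
    n = len(b_eff[0])
    # One multiply-accumulate per (i, j, p) point of the loop nest.
    y = [[alpha * sum(a_eff[i][p] * b_eff[p][j] for p in range(k))
          for j in range(n)]
         for i in range(m)]
    if c is not None:
        for i in range(m):
            for j in range(n):
                y[i][j] += beta * c[i][j]
    return y
```

For example, `gemm([[1, 2], [3, 4]], [[5, 6], [7, 8]])` returns `[[19.0, 22.0], [43.0, 50.0]]`; passing `transpose(b)` together with `trans_b=True` yields the same result.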

Member Function Documentation

◆ generate_layer_node()

def generate_layer_node(self)

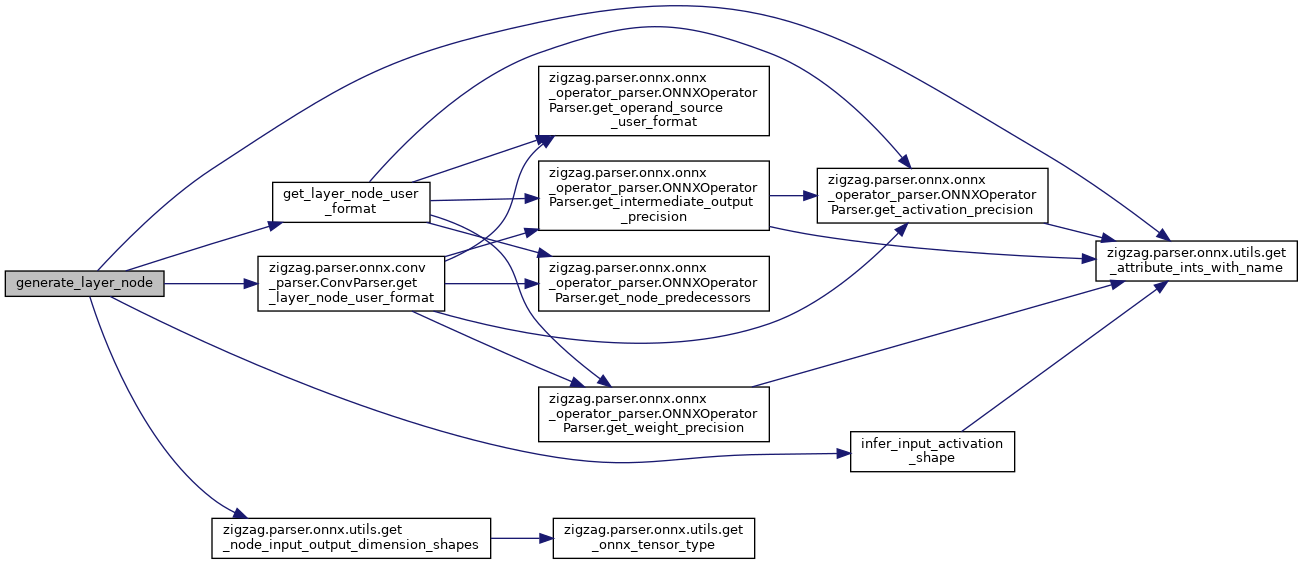

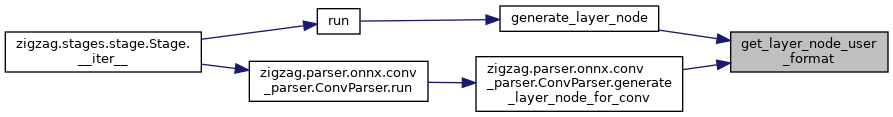

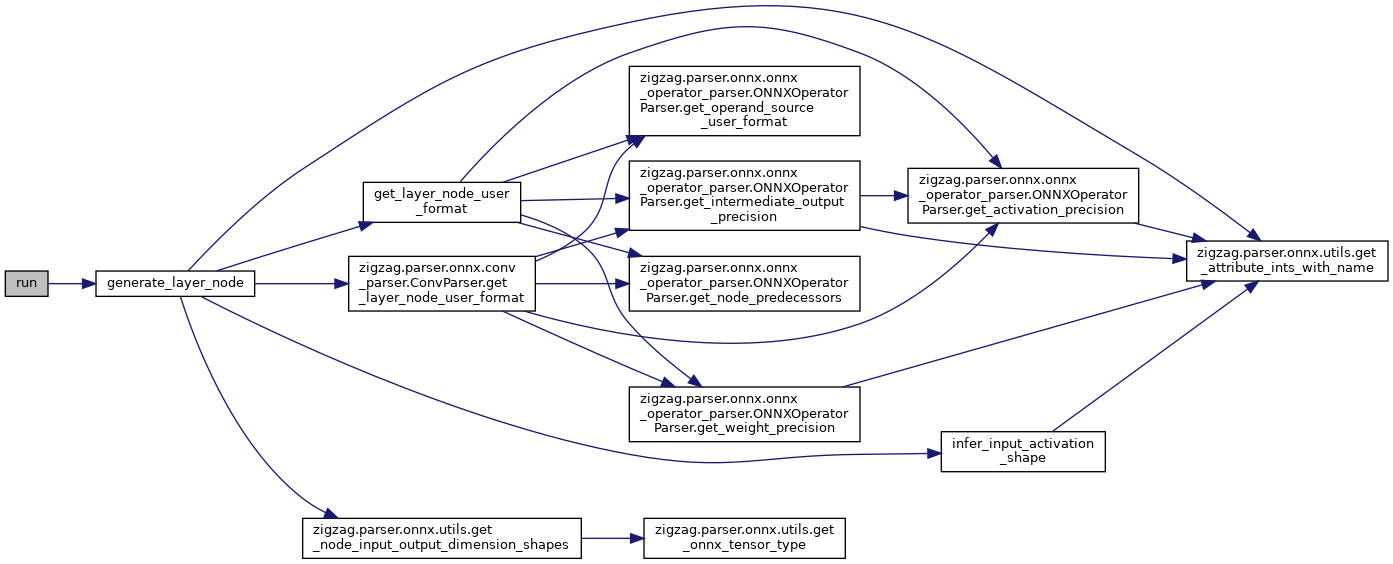

Here is the call graph for this function:

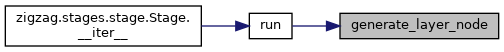

Here is the caller graph for this function:

◆ get_layer_node_user_format()

dict[str, Any] get_layer_node_user_format(self, list[int] input_shape, list[int] output_shape)

Generate layer data in user input format for MatMul or GEMM ONNX nodes.

`I[B][D][C] * W([B])[C][K] -> O[B][D][K]` or `I[B][H][D][C] * W([B][H])[C][K] -> O[B][H][D][K]`
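The shape relation above can be read as a loop nest over named dimensions. The sketch below, under the assumption that up to two leading dimensions are labelled B and then H exactly as in the formula, recovers those loop sizes from an input and output shape and counts the multiply-accumulates. The helpers `gemm_loop_dims` and `mac_count` are hypothetical and do not reproduce the keys of the dictionary that `get_layer_node_user_format` actually returns.

```python
from math import prod


def gemm_loop_dims(input_shape: list[int], output_shape: list[int]) -> dict[str, int]:
    """Map I[(B)][(H)][D][C] x W[C][K] -> O[(B)][(H)][D][K] onto named loop sizes.

    Assumption: zero, one or two leading dims are labelled B, then H;
    the last two input dims are D and C; the last output dim is K.
    """
    if len(input_shape) != len(output_shape) or not 2 <= len(input_shape) <= 4:
        raise ValueError("expected input and output of equal rank between 2 and 4")
    if input_shape[:-1] != output_shape[:-1]:
        raise ValueError("leading dims and D must match between input and output")
    *leading, d, c = input_shape
    dims = dict(zip(["B", "H"], leading))  # empty when there are no leading dims
    dims.update(D=d, C=c, K=output_shape[-1])
    return dims


def mac_count(dims: dict[str, int]) -> int:
    """One multiply-accumulate per point of the B, H, D, C, K loop nest."""
    return prod(dims.values())
```

For example, `gemm_loop_dims([2, 3, 8, 16], [2, 3, 8, 32])` gives `{"B": 2, "H": 3, "D": 8, "C": 16, "K": 32}`, and a plain 2D case `[8, 16] -> [8, 32]` needs `8 * 16 * 32 = 4096` MACs.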

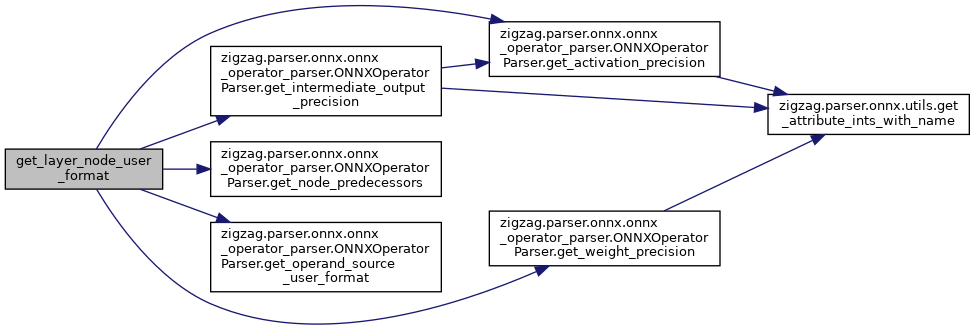

Here is the call graph for this function:

Here is the caller graph for this function:

◆ infer_input_activation_shape()

list[int] infer_input_activation_shape(self, list[int] output_shape)

If the input activations are empty (which can happen if there is a Shape operator in the path), extract the weights from the model graph initializer to get the correct input activation size.

NOTE: only implemented for 2D tensors.

TODO: a Shape operator in the ONNX graph should be dealt with at a higher level.
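For the 2D case, the idea reduces to simple shape arithmetic: with `O[D][K] = I[D][C] * W[C][K]`, the output supplies D and the weight initializer supplies C. In ONNX, an initializer's shape is stored in the `dims` field of its `TensorProto`. The sketch below assumes the weight shape has already been read from there; the helper name `infer_input_shape_2d` and its `trans_b` flag (covering a Gemm with `transB=1`, whose weight is stored as `[K, C]`) are illustrative assumptions, not a description of how ZigZag's method handles transposition.

```python
def infer_input_shape_2d(output_shape: list[int], weight_shape: list[int],
                         trans_b: bool = False) -> list[int]:
    """Recover the input activation shape [D, C] of O[D][K] = I[D][C] x W[C][K].

    weight_shape: shape of the weight initializer (TensorProto.dims in ONNX).
    trans_b: assumed flag; when True the weight is stored as [K, C].
    """
    if len(output_shape) != 2 or len(weight_shape) != 2:
        raise NotImplementedError("only 2D tensors are handled")
    if trans_b:
        k, c = weight_shape
    else:
        c, k = weight_shape
    if k != output_shape[1]:
        raise ValueError("weight K dimension does not match output K dimension")
    # D comes from the output, C from the weight.
    return [output_shape[0], c]
```

For instance, an output of shape `[8, 32]` with a `[16, 32]` weight implies an input activation of shape `[8, 16]`.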

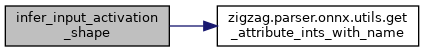

Here is the call graph for this function:

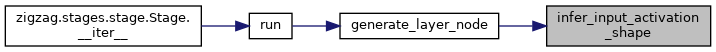

Here is the caller graph for this function:

◆ run()

LayerNode run(self)

Run the parser.

Reimplemented from ONNXOperatorParser.

Here is the call graph for this function:

Here is the caller graph for this function:

The documentation for this class was generated from the following file:

- /home/runner/work/zigzag/zigzag/zigzag/parser/onnx/gemm_parser.py