DefaultNodeParser Class Reference

This class parses an ONNX node into a DummyNode.

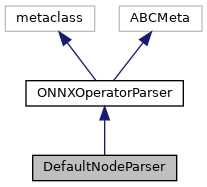

Inheritance diagram for DefaultNodeParser:

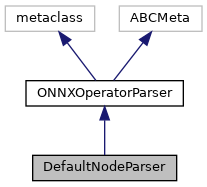

Collaboration diagram for DefaultNodeParser:

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| DummyNode | `run(self)` | Run the parser. |
| DummyNode | `generate_dummy_node(self)` | |

Public Member Functions inherited from ONNXOperatorParser

| Return type | Member | Description |
|---|---|---|
| None | `__init__(self, node_id: int, node: NodeProto, nodes_outputs: dict[int, Any], onnx_model: ModelProto, *, mapping_data: list[dict[str, Any]] \| None = None, accelerator: Accelerator \| None = None)` | |
| (not annotated) | `get_input_output_weight_data_type(self)` | Return the data type of the input, output, and weight tensors of this node. |
| (not annotated) | `get_weight_name(self, node: NodeProto)` | Return the name of the weight input of this node, depending on its operator type. |
| list[int] | `get_node_predecessors(self)` | Compute the input sources of this node. |
| (not annotated) | `get_operand_source_user_format(self, predecessors: list[int])` | Set the input sources and indicate constant operands. |
| (not annotated) | `get_weight_precision(self)` | Return the weight precision for this node. |
| (not annotated) | `get_activation_precision(self)` | Return the activation precision for this node. |
| (not annotated) | `get_intermediate_output_precision(self)` | Return the intermediate output precision for this node. |
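The inherited helpers are only summarized above. As a rough illustration of how `get_node_predecessors` and `get_operand_source_user_format` might fit together, the sketch below uses plain Python stand-ins instead of ONNX protobufs. It assumes that `nodes_outputs` maps each node id to the names of the tensors that node produces, that a predecessor is any node whose outputs feed one of this node's inputs, and that an operand left without a predecessor is constant. `FakeNode`, `get_operand_source`, and the operand labels `"I"` and `"W"` are hypothetical, not ZigZag's API.

```python
from dataclasses import dataclass, field


@dataclass
class FakeNode:
    """Hypothetical stand-in for onnx.NodeProto with only the fields used here."""

    name: str
    op_type: str
    input: list[str] = field(default_factory=list)
    output: list[str] = field(default_factory=list)


def get_node_predecessors(node: FakeNode, nodes_outputs: dict[int, list[str]]) -> list[int]:
    """Return the ids of nodes whose output tensors are consumed by `node`, in input order."""
    predecessors: list[int] = []
    for tensor_name in node.input:
        for node_id, outputs in nodes_outputs.items():
            if tensor_name in outputs and node_id not in predecessors:
                predecessors.append(node_id)
    return predecessors


def get_operand_source(
    predecessors: list[int], operands: tuple[str, ...] = ("I", "W")
) -> tuple[dict[str, int], list[str]]:
    """Pair operands with predecessor ids in order; operands left without a source are constant."""
    if len(predecessors) > len(operands):
        raise ValueError(f"expected at most {len(operands)} predecessors, got {len(predecessors)}")
    operand_source = dict(zip(operands, predecessors))
    constant_operands = [op for op in operands if op not in operand_source]
    return operand_source, constant_operands


if __name__ == "__main__":
    # Node 0 produces tensor "x"; the Conv consumes "x" and a weight "w" that no node produces.
    nodes_outputs = {0: ["x"]}
    conv = FakeNode(name="conv1", op_type="Conv", input=["x", "w"], output=["y"])
    preds = get_node_predecessors(conv, nodes_outputs)
    print(preds)  # [0]
    print(get_operand_source(preds))  # ({'I': 0}, ['W'])
```

Treating source-less operands as constant mirrors the one-line description "indicate constant operands"; how ZigZag actually labels and orders operands may differ.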

Additional Inherited Members

Public Attributes inherited from ONNXOperatorParser

| Attribute |
|---|
| `node_id` |
| `node` |
| `nodes_outputs` |
| `onnx_model` |
| `mapping_data` |
| `accelerator` |

Static Public Attributes inherited from ONNXOperatorParser

| Type | Attribute | Value |
|---|---|---|
| str | `CUSTOM_WEIGHT_SIZE_ATTR` | `"weight_size"` |
| str | `CUSTOM_ACT_SIZE_ATTR` | `"act_size"` |
| str | `CUSTOM_OUTPUT_SIZE_ATTR` | `"output_size"` |
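The three static attributes name custom ONNX node attributes. A minimal sketch of how a precision getter could use them, assuming the getter first looks for the matching custom attribute (interpreted here as a size in bits) and otherwise falls back to the bit width of the tensor's data type. The fallback table `BITS_PER_DATA_TYPE` and the function `get_precision` are hypothetical; only the three attribute names come from this page.

```python
# Attribute names copied from the static attributes above.
CUSTOM_WEIGHT_SIZE_ATTR = "weight_size"
CUSTOM_ACT_SIZE_ATTR = "act_size"
CUSTOM_OUTPUT_SIZE_ATTR = "output_size"

# Hypothetical fallback table: bit width per ONNX element type name.
BITS_PER_DATA_TYPE = {"FLOAT": 32, "FLOAT16": 16, "INT32": 32, "INT8": 8, "UINT8": 8}


def get_precision(node_attributes: dict[str, int], attr_name: str, data_type: str) -> int:
    """Return a precision in bits: the custom attribute if set, else the data type's bit width."""
    if attr_name in node_attributes:
        return node_attributes[attr_name]
    if data_type in BITS_PER_DATA_TYPE:
        return BITS_PER_DATA_TYPE[data_type]
    raise ValueError(f"no '{attr_name}' attribute and unknown data type {data_type!r}")


if __name__ == "__main__":
    attrs = {CUSTOM_WEIGHT_SIZE_ATTR: 4}  # a node annotated with 4-bit weights
    print(get_precision(attrs, CUSTOM_WEIGHT_SIZE_ATTR, "INT8"))  # 4
    print(get_precision(attrs, CUSTOM_ACT_SIZE_ATTR, "INT8"))  # 8
```

With the `onnx` package, an integer attribute of this kind can be attached when building a node, for example `onnx.helper.make_node("Conv", ["x", "w"], ["y"], weight_size=4)`; whether ZigZag reads and interprets it exactly as sketched here is an assumption.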

Detailed Description

This class parses an ONNX node into a DummyNode.
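A "default" parser is commonly the fallback for operator types that have no dedicated parser. The sketch below assumes a lookup table from the ONNX `op_type` to a parser class, with `DefaultNodeParser` used for anything not listed. `PARSER_MAPPING`, `ConvParser`, `LayerNode`, and the simplified class bodies are hypothetical stand-ins, not ZigZag's actual code.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class LayerNode:
    """Hypothetical result of a dedicated parser (a fully modeled layer)."""

    node_id: int
    node_type: str


@dataclass
class DummyNode:
    """Hypothetical result of the fallback parser (kept in the graph, not modeled)."""

    node_id: int
    node_type: str


class ConvParser:
    def __init__(self, node_id: int, op_type: str) -> None:
        self.node_id = node_id
        self.op_type = op_type

    def run(self) -> LayerNode:
        return LayerNode(self.node_id, self.op_type)


class DefaultNodeParser:
    def __init__(self, node_id: int, op_type: str) -> None:
        self.node_id = node_id
        self.op_type = op_type

    def run(self) -> DummyNode:
        return DummyNode(self.node_id, self.op_type)


# Hypothetical op_type -> parser table; unlisted operators fall back to DefaultNodeParser.
PARSER_MAPPING: dict[str, type] = {"Conv": ConvParser}


def parse(node_id: int, op_type: str) -> Union[LayerNode, DummyNode]:
    parser_cls = PARSER_MAPPING.get(op_type, DefaultNodeParser)
    return parser_cls(node_id, op_type).run()


if __name__ == "__main__":
    print(parse(0, "Conv"))  # LayerNode(node_id=0, node_type='Conv')
    print(parse(1, "Relu"))  # DummyNode(node_id=1, node_type='Relu')
```

Using `dict.get` with a default keeps the dispatch total: every node yields some workload node, so the graph stays connected even when an operator is not modeled.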

Member Function Documentation

◆ generate_dummy_node()

`generate_dummy_node(self) -> DummyNode`

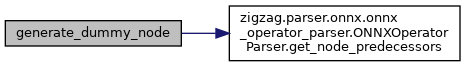

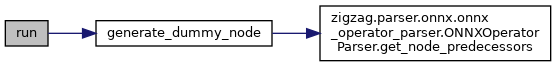

Here is the call graph for this function:

Here is the caller graph for this function:
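No description is given for `generate_dummy_node`. Judging only from its return type, a plausible shape is: collect the node's predecessors, then wrap its id, name, and operator type in a `DummyNode`. The sketch below is hypothetical throughout; `FakeNode` stands in for `onnx.NodeProto`, and the `DummyNode` fields are assumptions rather than ZigZag's definition.

```python
from dataclasses import dataclass, field


@dataclass
class FakeNode:
    """Hypothetical stand-in for onnx.NodeProto."""

    name: str
    op_type: str
    input: list[str] = field(default_factory=list)


@dataclass
class DummyNode:
    """Hypothetical fields; the real DummyNode may store more or different data."""

    node_id: int
    node_name: str
    node_type: str
    predecessors: list[int]


def generate_dummy_node(node_id: int, node: FakeNode, nodes_outputs: dict[int, list[str]]) -> DummyNode:
    # Predecessors are the nodes whose outputs this node consumes.
    predecessors = [nid for nid, outs in nodes_outputs.items() if any(t in outs for t in node.input)]
    # Keep only identifying information; no computation is modeled for this node.
    return DummyNode(node_id=node_id, node_name=node.name, node_type=node.op_type, predecessors=predecessors)


if __name__ == "__main__":
    flatten = FakeNode(name="flatten_0", op_type="Flatten", input=["y"])
    print(generate_dummy_node(3, flatten, {2: ["y"]}))
    # DummyNode(node_id=3, node_name='flatten_0', node_type='Flatten', predecessors=[2])
```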

◆ run()

`run(self) -> DummyNode`

Run the parser.

Reimplemented from ONNXOperatorParser.

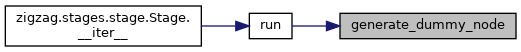

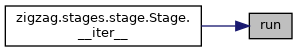

Here is the call graph for this function:

Here is the caller graph for this function:
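"Reimplemented from ONNXOperatorParser" means this class overrides the base-class `run`. The sketch below shows that relationship, assuming the base `run` is abstract and that the override simply delegates to `generate_dummy_node`. The `...Sketch` classes and the two-field `DummyNode` are simplified, hypothetical stand-ins.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class DummyNode:
    """Hypothetical, simplified DummyNode."""

    node_id: int
    node_type: str


class ONNXOperatorParserSketch(ABC):
    """Simplified stand-in for the base class: subclasses must provide run()."""

    def __init__(self, node_id: int, op_type: str) -> None:
        self.node_id = node_id
        self.op_type = op_type

    @abstractmethod
    def run(self) -> object:
        """Parse the node and return the resulting workload node."""


class DefaultNodeParserSketch(ONNXOperatorParserSketch):
    def run(self) -> DummyNode:
        # Override of the abstract base method: delegate to the node factory.
        return self.generate_dummy_node()

    def generate_dummy_node(self) -> DummyNode:
        return DummyNode(node_id=self.node_id, node_type=self.op_type)


if __name__ == "__main__":
    print(DefaultNodeParserSketch(5, "Softmax").run())  # DummyNode(node_id=5, node_type='Softmax')
```

Because the base `run` is abstract in this sketch, instantiating `ONNXOperatorParserSketch` directly raises `TypeError`, which forces every concrete parser to define how it turns a node into a workload object.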

The documentation for this class was generated from the following file:

- /home/runner/work/zigzag/zigzag/zigzag/parser/onnx/default_node_parser.py