Class that handles optimization of temporal mapping given a layer, a spatial mapping and a memory hierarchy.

Public Member Functions | |

| def | __init__ (self, *Accelerator accelerator, LayerNode layer, SpatialMappingInternal spatial_mapping, TemporalMappingType mapping_type, int|None loma_lpf_limit=None, **Any kwargs) |

| The memory hierarchy from the correct core is extracted from the accelerator. | |

| None | set_constraints (self, list[PermutationConstraint] constraints) |

| Generator[TemporalMapping, None, None] | run (self) |

| Runs the LomaEngine. | |

| def | get_temporal_loops (self) |

| Get all loops that have to be temporally scheduled given layer and spatial mapping. | |

| def | update_min_lpf_factor (self, dict[LayerDim, UnrollFactor] loop_sizes) |

| None | get_prime_factors (self) |

| Get the prime factors for all temporal loops. | |

| def | compute_nb_permutations (self) |

| Compute the number of permutations that will have to be considered given the LPF distribution. | |

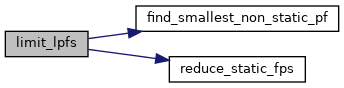

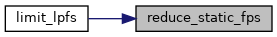

| def | reduce_static_fps (self) |

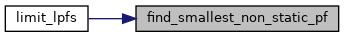

| tuple[int, int] | find_smallest_non_static_pf (self, LayerDim layer_dim) |

| None | limit_lpfs (self) |

| Function to limit the total number of loop prime factors present in this instance. | |

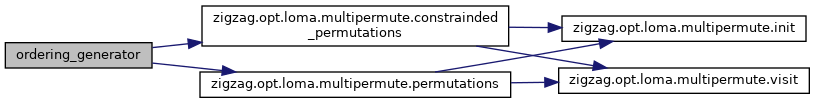

| Generator[list[tuple[LayerDim, int]], None, None] | ordering_generator (self) |

| Generator that yields all orderings of the temporal loops. | |

Detailed Description

Class that handles optimization of temporal mapping given a:

- layer

- spatial mapping

- a memory hierarchy

This optimization is carried out through loop-order-based memory allocation (LOMA). For each ordering of the temporal loops, the loops are allocated bottom-up to the levels of the memory hierarchy.

See https://ieeexplore.ieee.org/document/9458493 for more details.
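To illustrate what "allocated bottom-up" can mean, here is a deliberately simplified sketch. It is not the ZigZag implementation: the function name `allocate_bottom_up`, the single shared footprint, and the capacities expressed in data elements are all assumptions made for this example. The real engine reasons per operand and per memory level.

```python
def allocate_bottom_up(ordering, capacities):
    """Assign temporal loops (innermost first) to memory levels (lowest first).

    Toy model (assumption): the footprint held at a level is the product of all
    loop sizes allocated so far, and the top level accepts whatever is left.
    """
    allocation = [[] for _ in capacities]
    level = 0
    footprint = 1
    for dim, size in ordering:
        # Move up the hierarchy until the grown footprint fits, or the top is reached.
        while level < len(capacities) - 1 and footprint * size > capacities[level]:
            level += 1
        footprint *= size
        allocation[level].append((dim, size))
    return allocation


# Hypothetical ordering and capacities, chosen only for illustration.
ordering = [("K", 2), ("C", 2), ("K", 4), ("OX", 7)]
print(allocate_bottom_up(ordering, capacities=[4, 32, 10**9]))
```

A different loop ordering generally leads to a different allocation, which is why the engine evaluates many orderings.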

Constructor & Destructor Documentation

◆ __init__()

| def __init__ | ( | self, | |

| *Accelerator | accelerator, | ||

| LayerNode | layer, | ||

| SpatialMappingInternal | spatial_mapping, | ||

| TemporalMappingType | mapping_type, | ||

| int\|None | loma_lpf_limit = None, | ||

| **Any | kwargs | ||

| ) |

The memory hierarchy from the correct core is extracted from the accelerator.

- Parameters

- accelerator: accelerator to use the memory hierarchy of

- layer: layer to generate temporal mappings for

- spatial_mapping: SpatialMapping to use

- loma_lpf_limit

- kwargs: further unused, for ease of calling only

Member Function Documentation

◆ compute_nb_permutations()

| def compute_nb_permutations | ( | self | ) |

Compute the number of permutations that will have to be considered given the LPF distribution.
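As a rough illustration, if the loop prime factors (LPFs) are treated as a multiset, the number of distinct orderings is the multinomial coefficient n! / (k1! · k2! · ...), where each k is the multiplicity of a repeated (dimension, factor) pair. The sketch below assumes this is the quantity of interest; the helper name `count_orderings` and the list-of-tuples input are hypothetical and not part of the class.

```python
from collections import Counter
from math import factorial


def count_orderings(lpfs):
    """Count distinct orderings of a multiset of (dimension, prime factor) pairs."""
    total = factorial(len(lpfs))
    for multiplicity in Counter(lpfs).values():
        # Identical LPFs are interchangeable, so divide out their permutations.
        total //= factorial(multiplicity)
    return total


# K = 8 = 2*2*2, C = 3, OX = 7  ->  5! / 3! = 20 distinct orderings
print(count_orderings([("K", 2), ("K", 2), ("K", 2), ("C", 3), ("OX", 7)]))
```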

◆ find_smallest_non_static_pf()

| tuple[int, int] find_smallest_non_static_pf | ( | self, | |

| LayerDim | layer_dim | ||

| ) |

◆ get_prime_factors()

| None get_prime_factors | ( | self | ) |

Get the prime factors for all temporal loops.

The result is saved in three separate class attributes: temporal_loop_pfs, temporal_loop_pf_counts and temporal_loop_pf_count_sums.

TODO clean up (functions should not change class state variables...)
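A minimal sketch of the kind of decomposition described here, written as a pure function rather than one that mutates class state. The attribute names come from the text above, but the exact layout of each attribute (tuples keyed by dimension) is an assumption, as are the helper names `prime_factors` and `factorize_loops`.

```python
from collections import Counter


def prime_factors(n):
    """Return the prime factors of n in ascending order, with repetition."""
    factors = []
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors.append(p)
            n //= p
        p += 1
    if n > 1:
        factors.append(n)
    return factors


def factorize_loops(loop_sizes):
    """Build three dicts mirroring the three attributes named in the text."""
    pfs, pf_counts, pf_count_sums = {}, {}, {}
    for dim, size in loop_sizes.items():
        counter = Counter(prime_factors(size))
        pfs[dim] = tuple(counter.keys())          # unique prime factors
        pf_counts[dim] = tuple(counter.values())  # multiplicity of each factor
        pf_count_sums[dim] = sum(counter.values())
    return pfs, pf_counts, pf_count_sums


# Hypothetical temporal loop sizes: K = 8 = 2^3, C = 12 = 2^2 * 3
print(factorize_loops({"K": 8, "C": 12}))
```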

◆ get_temporal_loops()

| def get_temporal_loops | ( | self | ) |

Get all loops that have to be temporally scheduled given layer and spatial mapping.

TODO clean up (and make use of LayerDimSizes methods)

◆ limit_lpfs()

| None limit_lpfs | ( | self | ) |

Function to limit the total number of loop prime factors present in this instance.

This function scans the lpfs and, while the number of lpfs is greater than self.lpf_limit, it:

- picks the loop dimension that has the most lpfs

- merges the smallest two lpfs of that loop dimension (multiplying their values)
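The two steps above can be sketched as follows. This is a standalone illustration under assumptions: LPFs are stored as a dict from dimension name to a list of factors, ties between dimensions are broken by dict order, and the function returns a new dict instead of updating the instance. None of these details are confirmed by the source.

```python
def limit_lpfs(lpfs, lpf_limit):
    """Merge the smallest factors until at most lpf_limit factors remain."""
    lpfs = {dim: sorted(factors) for dim, factors in lpfs.items()}
    while sum(len(factors) for factors in lpfs.values()) > lpf_limit:
        # Step 1: pick the loop dimension that has the most LPFs.
        dim = max(lpfs, key=lambda d: len(lpfs[d]))
        factors = lpfs[dim]
        if len(factors) < 2:
            break  # nothing left to merge
        # Step 2: merge the two smallest LPFs by multiplying their values.
        merged = factors[0] * factors[1]
        lpfs[dim] = sorted(factors[2:] + [merged])
    return lpfs


# K = 16 = 2*2*2*2 and C = 15 = 3*5 give 6 LPFs; limit them to 4.
print(limit_lpfs({"K": [2, 2, 2, 2], "C": [3, 5]}, lpf_limit=4))
# -> {'K': [4, 4], 'C': [3, 5]}
```

Merging keeps the product per dimension unchanged while reducing the number of factors, and therefore the number of orderings to explore.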

◆ ordering_generator()

| Generator[list[tuple[LayerDim, int]], None, None] ordering_generator | ( | self | ) |

Generator that yields all orderings of the temporal loops.
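One way to enumerate orderings without yielding duplicates when the same (dimension, factor) pair occurs several times is a backtracking generator over a multiset. The sketch below uses only the standard library; the name `distinct_orderings` is hypothetical, and the approach is an assumption about the general technique rather than a description of the actual method, which may also apply the constraints set through set_constraints.

```python
from collections import Counter


def distinct_orderings(loops):
    """Yield every distinct ordering of a multiset of (dimension, factor) pairs."""
    remaining = Counter(loops)
    total = len(loops)
    current = []

    def backtrack():
        if len(current) == total:
            yield list(current)
            return
        # Iterate over unique items so identical loops never swap places.
        for item in list(remaining):
            if remaining[item] == 0:
                continue
            remaining[item] -= 1
            current.append(item)
            yield from backtrack()
            current.pop()
            remaining[item] += 1

    yield from backtrack()


# Two identical K factors and one C factor: 3! / 2! = 3 orderings.
for ordering in distinct_orderings([("K", 2), ("K", 2), ("C", 3)]):
    print(ordering)
```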

◆ reduce_static_fps()

| def reduce_static_fps | ( | self | ) |

◆ run()

| Generator[TemporalMapping, None, None] run | ( | self | ) |

Runs the LomaEngine.

- Returns

- Generator that yields all temporal mappings

◆ set_constraints()

| None set_constraints | ( | self, | |

| list[PermutationConstraint] | constraints | ||

| ) |

◆ update_min_lpf_factor()

| def update_min_lpf_factor | ( | self, | |

| dict[LayerDim, UnrollFactor] | loop_sizes | ||

| ) |

Member Data Documentation

◆ accelerator

| accelerator |

◆ constraints

| constraints |

◆ has_constraints

| has_constraints |

◆ layer

| layer |

◆ lpf_limit

| lpf_limit |

◆ lpfs

| lpfs |

◆ mapping_type

| mapping_type |

◆ memory_hierarchy

| memory_hierarchy |

◆ nb_permutations

| nb_permutations |

◆ show_progress_bar

| show_progress_bar |

◆ spatial_mapping

| spatial_mapping |

◆ temporal_loop_dim_size

| temporal_loop_dim_size |

◆ temporal_loop_pf_count_sums

| temporal_loop_pf_count_sums |

◆ temporal_loop_pf_counts

| temporal_loop_pf_counts |

The documentation for this class was generated from the following file:

- /home/runner/work/zigzag/zigzag/zigzag/opt/loma/engine.py