Parses the ONNX model into a workload.

Public Member Functions | |

| None | __init__ (self, str|ModelProto onnx_model, str mapping_yaml_path) |

| ONNXWorkload | run (self) |

| Iterates through the ONNX model and generates the workload, consisting of LayerNodes and DummyNodes. | |

| def | get_parser_class (self, NodeProto node) |

| def | parse_workload_from_onnx_model_and_mapping (self) |

| Converts an ONNX model into a workload object. | |

Public Attributes | |

| onnx_model | |

| workload | |

| mapping_yaml_path | |

| mapping_data | |

Detailed Description

Parses the ONNX model into a workload.

Constructor & Destructor Documentation

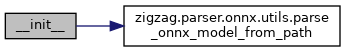

◆ __init__()

| None __init__ | ( | self, str|ModelProto onnx_model, str mapping_yaml_path | ) |

Member Function Documentation

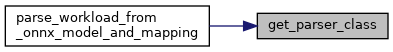

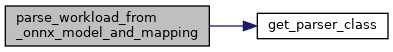

◆ get_parser_class()

| def get_parser_class | ( | self, NodeProto node | ) |

◆ parse_workload_from_onnx_model_and_mapping()

| def parse_workload_from_onnx_model_and_mapping | ( | self | ) |

Converts an ONNX model into a workload object.

We scan the model for all convolutional layers and set up a Layer object for each of them using the mapping. We then combine the layers into a workload graph.

If the model is not stored in the external-data format, manipulating it is slow, so it is better to work with raw models whose tensor data is kept in an external file. The following call converts a model to that format:

onnx.save_model(model, 'model_external.onnx', save_as_external_data=True, all_tensors_to_one_file=True, location='model_external_raw_data', size_threshold=1024, convert_attribute=False)
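The conversion call above can be wrapped in a small helper. This is a minimal sketch, assuming the `onnx` package is installed; the function name `save_with_external_data` and its parameter names are illustrative and are not part of the parser class.

```python
from pathlib import Path


def save_with_external_data(model, out_path="model_external.onnx",
                            data_file="model_external_raw_data"):
    """Write `model` so that large tensors are stored in a side file.

    Hypothetical helper: `model` is expected to be an onnx.ModelProto.
    """
    import onnx  # imported lazily so this module loads even without onnx installed

    onnx.save_model(
        model,
        str(out_path),
        save_as_external_data=True,
        all_tensors_to_one_file=True,  # one side file instead of one per tensor
        location=data_file,            # side-file path, relative to the .onnx file
        size_threshold=1024,           # tensors below this many bytes stay inline
        convert_attribute=False,       # leave tensors stored in node attributes inline
    )
    return Path(out_path)
```

Calling `save_with_external_data(model)` would produce `model_external.onnx` plus the `model_external_raw_data` side file in the same directory.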

In the future, we will assume that the model has been saved with external data. If shapes have not yet been inferred for the model, the code below must then be executed. This approach is faster for large models because shape inference runs on the raw model file, without the external data:

onnx.shape_inference.infer_shapes_path('path/to/the/model.onnx')  # saves the inferred model to the same file

model = onnx.load('path/to/the/model.onnx')  # reloads the inferred model
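The "infer only if needed" flow can be sketched as follows. This is not the parser's own code: the helper names are hypothetical, the `onnx` package is assumed to be installed, and treating an empty `graph.value_info` as "shapes not inferred yet" is a heuristic assumption that can misfire on graphs with no intermediate tensors.

```python
def has_inferred_shapes(model):
    """Heuristic: shape inference fills graph.value_info with intermediate tensors."""
    return len(model.graph.value_info) > 0


def load_with_inferred_shapes(model_path):
    """Load an ONNX model, running on-disk shape inference first when it looks necessary."""
    import onnx  # imported lazily so this module loads even without onnx installed
    import onnx.shape_inference

    path = str(model_path)
    # Read only the graph structure; the external tensor data is not pulled in.
    structure_only = onnx.load(path, load_external_data=False)
    if not has_inferred_shapes(structure_only):
        # With no output path given, the inferred model overwrites the input file.
        onnx.shape_inference.infer_shapes_path(path)
    return onnx.load(path)  # reload, now including the external data
```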

For each node_id, the names of the node's input and output tensors are stored.
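The per-node bookkeeping of tensor names, and how it can be used to connect layers into a graph, might look like the sketch below. The `Node` class is a stand-in for `onnx.NodeProto`, and `collect_io_names` and `find_edges` are hypothetical helpers, not functions of the parser.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Stand-in for onnx.NodeProto, with only the fields used here."""
    op_type: str
    input: list[str] = field(default_factory=list)
    output: list[str] = field(default_factory=list)


def collect_io_names(nodes):
    """Map each node_id (position in the graph) to its input and output tensor names."""
    nodes_inputs, nodes_outputs = {}, {}
    for node_id, node in enumerate(nodes):
        nodes_inputs[node_id] = list(node.input)
        nodes_outputs[node_id] = list(node.output)
    return nodes_inputs, nodes_outputs


def find_edges(nodes_inputs, nodes_outputs):
    """Connect producer to consumer whenever an output name reappears as an input name."""
    producer = {name: nid for nid, outs in nodes_outputs.items() for name in outs}
    edges = []
    for consumer_id, names in nodes_inputs.items():
        for name in names:
            if name in producer:  # graph inputs and weights have no producer node
                edges.append((producer[name], consumer_id))
    return edges


nodes = [
    Node("Conv", ["x", "w0"], ["a"]),
    Node("Relu", ["a"], ["b"]),
    Node("Conv", ["b", "w1"], ["y"]),
]
ins, outs = collect_io_names(nodes)
edges = find_edges(ins, outs)
```

Matching tensor names is what turns a flat list of nodes into a graph: an edge exists wherever one node's output name is another node's input name.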

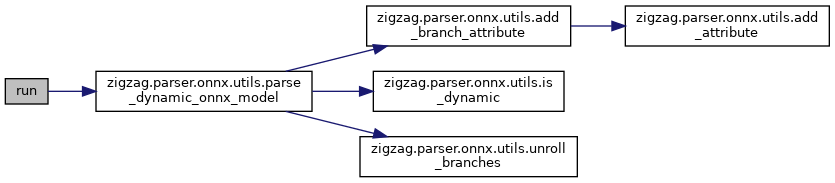

◆ run()

| ONNXWorkload run | ( | self | ) |

Iterates through the ONNX model and generates the workload, consisting of LayerNodes and DummyNodes.
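As an illustration of the split into LayerNodes and DummyNodes, the sketch below classifies nodes by operator type. The two dataclasses are simplified stand-ins for the real classes, and both the `LAYER_OP_TYPES` set and the rule that every other operator becomes a DummyNode are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class LayerNode:
    """Simplified stand-in: a node that is mapped onto the hardware."""
    node_id: int
    op_type: str


@dataclass
class DummyNode:
    """Simplified stand-in: a node kept only to preserve graph connectivity."""
    node_id: int
    op_type: str


# Assumption for this sketch: only convolutions become LayerNodes.
LAYER_OP_TYPES = {"Conv"}


def build_workload(op_types):
    """Walk the operators in graph order and wrap each one in the matching node class."""
    workload = []
    for node_id, op_type in enumerate(op_types):
        node_cls = LayerNode if op_type in LAYER_OP_TYPES else DummyNode
        workload.append(node_cls(node_id, op_type))
    return workload


workload = build_workload(["Conv", "Relu", "Conv"])
```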

Member Data Documentation

◆ mapping_data

| mapping_data |

◆ mapping_yaml_path

| mapping_yaml_path |

◆ onnx_model

| onnx_model |

◆ workload

| workload |

The documentation for this class was generated from the following file:

- /home/runner/work/zigzag/zigzag/zigzag/parser/onnx/onnx_model_parser.py